According to the State of Cloud Native Development report by CNCF, as of Q1 2025, nearly half (49%) of backend developers are working with cloud-native architectures – that’s around 9.2 million specialists. This shift toward microservices, containers, and DevOps practices reflects the maturity of the approach and its steady demand across the business landscape.

Against this backdrop, Go continues to gain popularity – in May 2025, it ranked 7th in the TIOBE index. That’s no coincidence. Go naturally aligns with cloud-native principles: it’s easy to learn, works seamlessly in containers, and integrates closely with most popular tools.

In this article, we’ll delve into the factors that have solidified Go’s reputation in the development community and examine scenarios where it truly shines.

Contents

- Cloud-Native Development: Definition, Characteristics, Requirements, Market Position

- How to Choose the Best Language for Cloud-Native Success

- Why Developers Choose Go

- Cloud-Native Architecture: When It Adds Maximum Value

- Conclusion

Cloud-Native Development: Definition, Characteristics, Requirements, Market Position

In the past, software was typically built using a monolithic architecture. This means the entire app was developed as a single, indivisible unit. It ran on one physical server, which required regular maintenance and often led to additional costs related to hardware, downtime, and scaling.

Cloud-native is a different approach. It means creating software specifically designed to run in the cloud. The cloud refers to remote servers provided by companies like Amazon, Microsoft, and Google (but there’s no need to commit to a single infrastructure or become dependent on a specific vendor). These servers can store data, run applications, and provide computing power without the need to buy or maintain physical hardware. For developers and businesses, this means they don’t have to worry about setting up or maintaining their own infrastructure.

Instead of one large application, cloud-native systems are usually made up of small, independent parts (called microservices) that can be updated or expanded separately. Applications built using cloud architecture are reliable, scalable, high-performing, and help bring products to market faster.

Cloud-Native vs On-Premise

Cloud-native apps are usually designed with modularity in mind. This means teams can update parts of the system without disrupting the whole thing, making it much easier to add features or improvements. On-premise apps, however, are often built as one big piece (monolithic), so changes in one area can have ripple effects across the entire application.

Automation is a big deal in the cloud-native world. Things like CI/CD streamline updates and deployments. While you can automate on-premise setups, it’s often more complex to roll out changes.

Scaling is another area where cloud-native shines. Need more resources? Just dial them up on demand. No need for new hardware. On-premise scaling, on the other hand, means buying and installing servers, finding space for them, and potentially reconfiguring your entire infrastructure.

Cost-wise, cloud platforms usually operate on a pay-as-you-go basis – you only pay for what you use. On-premise solutions require a big upfront investment in hardware and ongoing maintenance. Plus, if demand drops, those underutilized servers become a money pit.

Finally, with public cloud services, the provider handles all the hardware maintenance and updates, freeing up your team to focus on building your product. On-premise means you’re responsible for everything, but it does give you complete control, which is crucial for organizations with strict security requirements.

| Aspect | Cloud-Native Applications | On-Premise Applications |
| --- | --- | --- |
| Deployment | Run in cloud environments using third-party infrastructure. | Installed and run on the company’s own physical servers. |
| Architecture | Built with modular components (microservices); easier to update and expand. | Often built as monolithic systems; changes are harder and riskier to implement. |
| Automation | High automation with DevOps practices like CI/CD to streamline updates and deployments. | Automation is possible, but deployments are typically more manual and complex. |
| Scalability | Easily scalable; resources adjust automatically based on demand. | Scaling requires buying and installing new hardware, which is time-consuming and costly. |
| Cost Model | Pay-as-you-go: pay only for the resources used; better suited for variable workloads. | High upfront investment in hardware and ongoing maintenance, regardless of usage. |
| Maintenance | Cloud provider manages and maintains physical infrastructure. | Company is responsible for maintaining all hardware and infrastructure. |
| Control and Security | Less control over physical infrastructure, but typically compliant with industry standards. | Full control over data and infrastructure. Essential for organizations with strict security requirements. |

What’s Going On in the Market

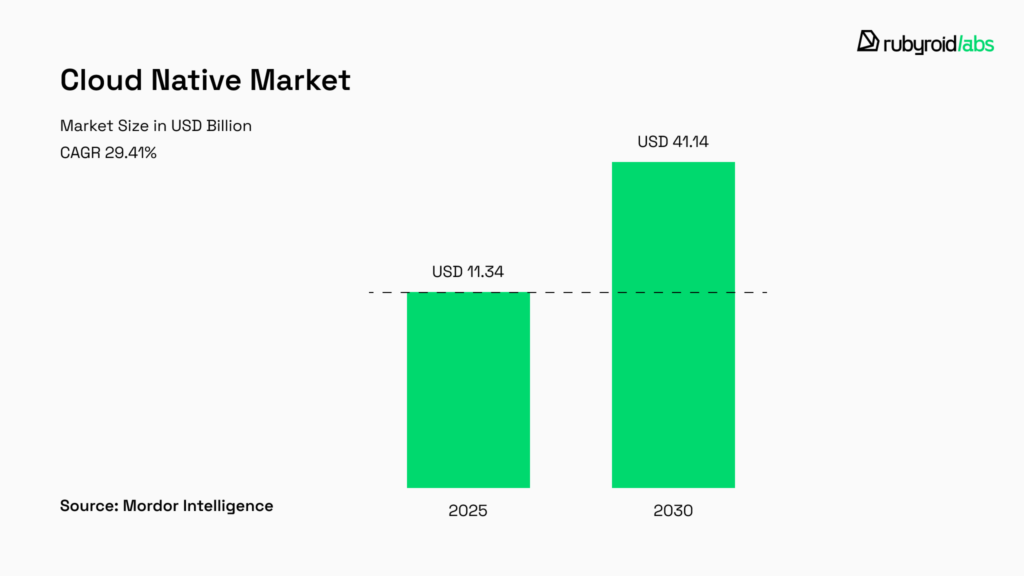

The cloud-native application market is entering a period of accelerated expansion, with a projected compound annual growth rate (CAGR) of 29.41% through 2030.

The latest CNCF Annual Survey reveals a clear shift: cloud-native technologies have become mainstream, with 89% of organizations now adopting them – a record high. What’s notable is that this growth spans companies of all sizes, from startups to enterprises, signaling widespread confidence in the cloud-native approach.

Security has also taken a front seat. Compared to the previous year, respondents reported meaningful progress in strengthening their software security posture.

What Makes a Language Fit for Cloud-Native

The language you choose directly impacts how quickly you can develop applications, how efficiently they perform in a distributed environment, and how easy they are to maintain. To suit Cloud-Native demands, a programming language needs to meet at least three key requirements.

Speed & Performance

Cloud-native environments thrive on agility. Teams need to ship updates quickly, test ideas, and scale features as needed. So the language has to support fast development. This comes down to having a clean syntax, a rich library, plenty of tools, and an active community.

But speed of development shouldn’t come at the cost of performance. Cloud-Native apps run under high load and need to use resources efficiently. If a microservice uses too much memory or responds too slowly, it drives up infrastructure costs.

The ideal language strikes a balance. Golang is a strong example here: it compiles to fast, lightweight binaries but keeps the syntax simple and readable.

Efficiency in Distributed Systems

Cloud-Native is inherently distributed. Apps are made up of many components, running in different containers, on different servers, and often across different data centers. These components need to communicate, handle failures, and stay in sync.

The language should assist developers in managing these complexities by making it easier to work with networks, APIs, serialization, and error handling. In addition, built-in support for asynchronous programming, multithreading, or concurrency would be a significant advantage.

For example, Node.js has an event-driven and asynchronous architecture, which makes it well-suited for interacting with external services. Golang has built-in support for goroutines and channels, which help to simplify coordination between different components in a distributed system.

Low Overhead for DevOps

Development is not limited to writing code; it also involves deploying, updating, and maintaining the application. Apps need to run in containers, integrate smoothly with CI/CD pipelines, and be easy to monitor and log.

Languages should make it easy to build small, self-contained artifacts. Go excels here too. It compiles into a single binary with no external dependencies, which simplifies containerization and keeps image sizes small. In contrast, languages like Python or Java often require more setup and dependency management.

Mature ecosystems are also vital. The more tools available for testing, logging, tracing, and metrics, the easier it is to integrate with DevOps workflows. The smoother the fit, the lower the cost of supporting the app in production.

How to Choose the Best Language for Cloud-Native Success

If you’re hoping for a single, definitive answer to this question, you won’t find it here or anywhere else, and for good reason.

Several factors determine the best choice: the architecture you’re building, the cloud provider you’re using, existing skills within your team, and even your long-term hiring strategy. No language is universally better; each comes with its own trade-offs.

What we can do, though, is look closely at the languages that have become common in cloud-native projects and compare how they perform in real-world use. The goal isn’t to crown a winner, but to help you understand what each language offers and where it might best fit into your own cloud-native strategy.

Python

Python is a favored option for developing a broad spectrum of cloud services, thanks to its vast array of libraries. This includes tools like Flask and Django for web development, and TensorFlow and scikit-learn for machine learning. It’s a great fit for automating tasks, writing scripts, and analyzing data, which makes it a critical tool for managing cloud infrastructure and rapid prototyping. Its compatibility with major cloud platforms further strengthens its position in cloud-native solutions.

Instagram relies heavily on Python to power its large codebase. Python is a key component in Netflix’s AI/ML initiatives, driving features like personalized recommendations and video quality enhancements. Cisco depends on Python scripts to automate its user management tasks, and Dropbox has built much of its architecture with Python. Its dominance is especially strong in backend development within cloud environments.

Considerations: As an interpreted language, Python typically has lower execution speed compared to compiled languages (we cover this in more detail in our article Go vs. Python), which can be a drawback for performance-critical applications. Its dynamic typing may lead to runtime errors and unexpected behavior, potentially requiring more frequent testing and debugging. Python’s Global Interpreter Lock (GIL) limits true multithreading, making it less effective for CPU-bound tasks; the usual workaround of running several processes in parallel tends to increase memory usage.

Java

Java boasts a mature ecosystem, most notably Spring Boot, which is used to build microservices and cloud applications. Java’s automatic memory management (garbage collection) and robust multithreading support contribute to its reliability and ability to handle distributed processing.

Netflix, a pioneer in microservices architecture, relies heavily on Spring Boot to run its backend services, enabling it to serve millions of users and scale efficiently. Amazon (real-time order tracking), Intuit (scalable data analysis microservices), PayPal (payment processing), and eBay (inventory management) all use Spring Boot in their microservices architectures. A 2023 Dynatrace survey identified Java as the leading language for Kubernetes application workloads: 72% of organizations use it to some extent, and 65% of workloads run on the JVM.

Considerations: Java applications, particularly those running on the Java Virtual Machine (JVM), can have high memory overhead and slower startup times compared to languages like Go or Rust. This can affect cost efficiency in serverless or highly elastic environments where instances are frequently spun up and down. While general-purpose Java is free, some advanced features or commercial distributions may require a paid license. Additionally, Java code can be verbose and more complex to write and maintain.

JavaScript (Node.js)

The core design of Node.js makes it uniquely suited to specific cloud patterns, especially those requiring high concurrency and low latency for I/O operations. Node.js’s key architectural strength lies in its event-driven, non-blocking I/O model, which allows it to handle multiple requests concurrently and efficiently.

Cloud apps often involve large volumes of parallel I/O-related operations, such as real-time user interactions, API gateways, data streaming, or microservices communication. In these scenarios, the ability to manage numerous open connections and respond quickly without blocking the main thread is critical.

Node.js is widely used for serverless architectures. Major companies such as Netflix (for designing user interfaces), LinkedIn (enhancing the mobile experience), Trello (real-time updates), Uber (building resilient, self-healing distributed systems), eBay (maintaining live connections to servers), PayPal (faster page response times), and Walmart use Node.js for their backend services and microservices (we compare it to Golang in our article Go vs Node.js: Which One to Choose for Backend Development?). According to the 2023 Dynatrace survey, Node.js ranks as the third most popular language for Kubernetes application workloads.

Considerations: Node.js may not be ideal for CPU-intensive tasks, as heavy computations can block incoming requests and degrade performance due to its single-threaded event loop. Asynchronous programming in Node.js heavily relies on callbacks, which can lead to complex “callback hell” structures, although modern async/await syntax helps manage this complexity.

Rust

Rust’s unique ownership model guarantees memory and thread safety at compile time, virtually eliminating common vulnerabilities like null pointer dereferences and buffer overflows. This results in highly reliable and secure systems, reducing debugging time and operational costs. Rust compiles to native binaries, leading to very small binary sizes (5-10MB) and lightning-fast “cold starts”, making it ideal for serverless functions. It offers predictable performance due to the absence of a garbage collector. Rust’s modern tooling (Cargo, Rustfmt, Clippy) and strong community also contribute to its appeal.

Rust is used by major tech companies for performance-critical and security-sensitive components. AWS Lambda has integrated Rust into its runtime environment. Dropbox uses Rust for its file synchronization engine, and Cloudflare uses it in its core edge computing platform and as a C replacement. Rust is often used alongside Python and JavaScript in polyglot environments, suggesting a complementary role.

Considerations: Build times can be long, though incremental builds are faster. Its ecosystem is still maturing compared to more established languages. Rearchitecting in Rust can be agonizing as it forces upfront architectural thinking.

C#

C# combined with the .NET platform is a robust choice for cloud-native development, especially within the Microsoft Azure ecosystem. It has become fully cross-platform, supporting Windows, macOS, and Linux. C# offers automatic memory management (garbage collection), robust exception handling, and extensive built-in libraries.

.NET 8 includes optimizations for reduced memory allocation and improved efficiency. Its object-oriented design promotes modular, reusable, and scalable code, ideal for microservices. The .NET platform also provides robust tooling for testing, monitoring, and security, such as data encryption and authentication.

C# is widely used for building cloud-native solutions. Companies like Netflix, Amazon, and Microsoft have successfully adopted microservices to improve performance, flexibility, and accelerate innovation, often leveraging .NET. C# is suitable for building e-commerce applications (with microservices for user, catalog, and order management), real-time data processing apps, and IoT platforms.

Considerations: C# applications can consume more memory and processing power compared to some other languages due to the managed runtime environment. Historically, C# was tightly coupled with Windows, and while .NET Core addressed this, some might still perceive it as vendor lock-in (Microsoft).

Golang

Go’s design directly aligns with the operational demands of cloud infrastructure and high-performance microservices. Core cloud-native projects like Kubernetes, Docker, CoreDNS, and Prometheus are predominantly built with Go. This is a direct consequence of Go’s strengths: efficient concurrency (goroutines), fast compilation, the ability to produce small, self-contained binaries, and strong networking capabilities.

These features are paramount for building robust, scalable, and efficient infrastructure components that manage distributed systems in the cloud. Go is not just a popular language for cloud-native; it’s the foundational language of cloud-native infrastructure. Uber, SoundCloud, Twitch, American Express, and Cloudflare (for DNS, SSL, load testing) use Go for their backend services and microservices. ByteDance uses Go for 70% of its microservices.

At Rubyroid Labs, we love using Go to build high-performance backend systems, cloud-native microservices, and scalable APIs. If you’re looking for a development team with deep Go expertise, check out our Golang development services.

Considerations: In Go, errors are returned as values, and developers must explicitly check each one. In a microservices architecture, where failure points are everywhere, error management becomes both critical and cumbersome if handled in the traditional Go way. Then there’s the limited support for reflection and metaprogramming. Go deliberately avoids “magic”. Everything must be explicit. It requires more manual work, especially when building complex, modular services.

| Language | Primary Cloud Use | Key Cloud Advantages | Relevant Survey Data |
| --- | --- | --- | --- |
| Go (Golang) | High-performance services, system programming, infrastructure (Kubernetes, Docker) | Efficient concurrency (goroutines), fast compilation, small binary size, memory safety | Top 2 for Kubernetes workloads (Dynatrace 2023), most planned for adoption (JetBrains 2024), strong AWS/Azure/GCP CDK support |
| Python | Web applications, ML/AI, automation, scripting, infrastructure management | Simplicity, vast library ecosystem, rapid development, cross-platform | Top 3 overall usage (Stack Overflow 2024, JetBrains 2024), strong AWS/Azure/GCP CDK support |
| Java | Enterprise applications, backend services, distributed systems, microservices | Reliability, scalability, mature ecosystem (Spring Boot), platform-independence, multithreading | Top 1 for Kubernetes workloads (Dynatrace 2023), strong AWS/Azure/GCP CDK support |
| JavaScript (Node.js) | Full-stack development, web applications, APIs, serverless functions, real-time | Unified stack, event-driven architecture, non-blocking I/O, scalability | Top 3 for Kubernetes workloads (Dynatrace 2023), strong AWS/Azure/GCP CDK support |
| Rust | System programming, high-performance services, security, serverless functions | Memory safety, thread safety, high performance, no garbage collector, small binary size | High interest in adoption (JetBrains 2024), actively used in AWS Lambda, Cloudflare |
| C# (.NET) | Enterprise applications, web applications, microservices, Microsoft Azure | Cross-platform (.NET Core), rich ecosystem, performance, Windows integration | Strong AWS/Azure/GCP CDK support, actively used for cloud solutions |

The analysis reveals that cloud-native development thrives on a polyglot approach, allowing organizations to select the most suitable tools for specific tasks. There is no single “best” language, but rather a set of leading languages, each with its unique strengths and application areas.

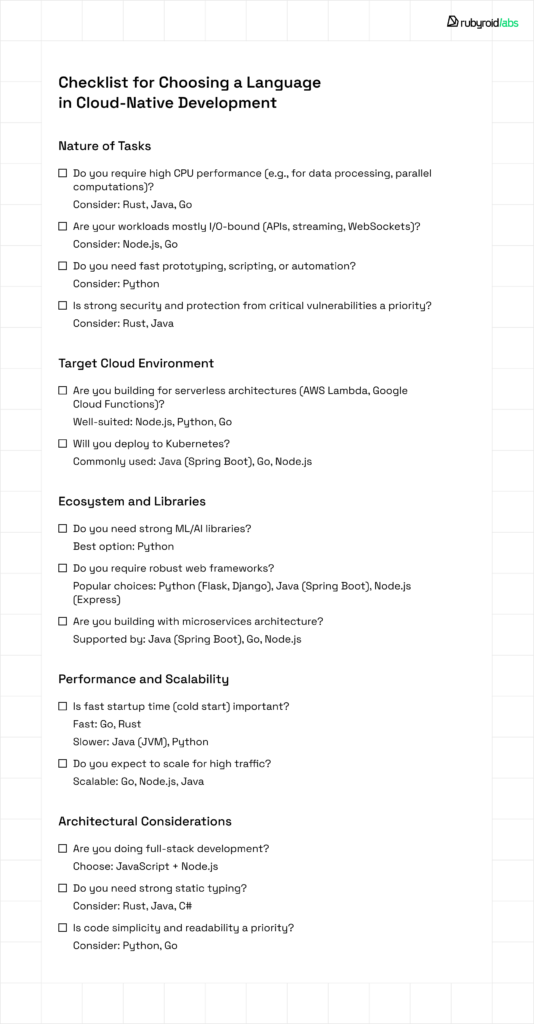

For technical leaders, the strategic decision lies in embracing this polyglot paradigm. This means building teams with diverse expertise, investing in platform engineering to manage complexity, and continuously evaluating new languages and frameworks based on specific project requirements rather than blindly following general popularity. To support this approach, use the checklist below to guide language selection in cloud-native development:

Why Developers Choose Go

Golang (Go) was designed as a response to the growing complexity and sluggishness of existing tools used to build scalable, distributed systems. Google engineers set out to create a language that combined the performance of compiled languages with a clean syntax and built-in support for concurrency. The result is a minimalist yet powerful language, free of unnecessary complexity.

For a closer look at Go’s key strengths and insights from our VP of Engineering, read the full article: What Is Go Programming Language.

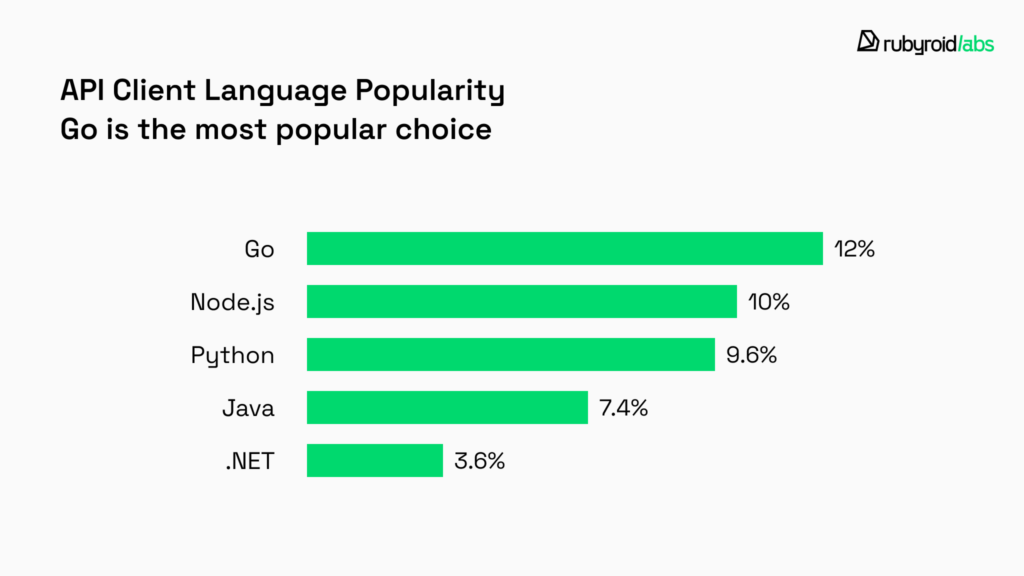

Today, Go is seeing strong momentum in cloud development. According to Cloudflare’s 2024 API Client Language Popularity report, Go is now the most popular choice for building automated API clients, responsible for around 12% of all automated API requests. Given that over half of all dynamic traffic on Cloudflare’s network is API-related, and that much of it comes from non-browser clients, this is a strong signal of Go’s growing influence in modern cloud-native ecosystems.

So why does Go work so well in cloud environments? Let’s take a closer look at the language features and ecosystem strengths that help teams build fast, scalable cloud applications.

Ability to Compile Quickly and Deploy Easily

When developers write code in Go, it goes through a process called compilation, where the code is translated into a static binary – a single, self-contained file that includes everything needed to run the program (all the code, libraries, and dependencies). Unlike many other languages, Go doesn’t require a separate runtime environment to be installed on the system where the app will run.

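With Go, once the code is compiled into a binary for a given operating system and CPU architecture, you can run that file on any matching machine, regardless of the cloud provider, without installing anything else. Go can also cross-compile for other targets from a single build machine by setting the GOOS and GOARCH environment variables. This is about as close as a compiled language gets to “build once, run anywhere”, and the model is especially helpful in Cloud-Native environments.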

Cloud-Native assumes that apps often run inside containers (lightweight, isolated environments that include the app and everything it needs to run). Because Go binaries are compact and don’t have external dependencies, the resulting container images (the packages used to run software in the cloud) are much smaller than those made with many other languages. Smaller images are faster to build, faster to deploy, and easier to secure.

Parallel Task Handling

Concurrency is the ability of a program to handle multiple tasks simultaneously. Go was designed with concurrency in mind from the very beginning. It doesn’t require complex tools or third-party libraries to manage concurrent tasks. Instead, Go includes its own simple and efficient solution: goroutines.

A goroutine is a lightweight version of a thread (a basic unit of work that a computer runs). You can easily launch thousands of them without slowing down your system or using a lot of memory, which is perfect for Cloud-Native workloads, where constant communication between services is common.

Cloud-Native applications often need to handle a large number of requests at the same time. Take a typical web API for an online store: every time a user visits the site, the backend might need to fetch product details, check stock availability, calculate shipping options, and apply discounts, all in parallel.

With Go, each of these tasks can run in its own goroutine, so they don’t block one another. Channels help coordinate the results and return a response quickly and reliably.
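The online store scenario above can be sketched roughly as follows. This is an illustrative example rather than a production pattern: `fetchAll`, the `result` type, and the lookup names are hypothetical, and each "backend call" is just a function returning a fixed string.

```go
package main

import (
	"fmt"
	"sort"
)

// result carries the output of one backend lookup back to the caller.
type result struct {
	name  string
	value string
}

// fetchAll runs every lookup in its own goroutine and gathers the
// results over a buffered channel, so no lookup blocks another.
func fetchAll(lookups map[string]func() string) map[string]string {
	ch := make(chan result, len(lookups))
	for name, fn := range lookups {
		go func(name string, fn func() string) {
			ch <- result{name: name, value: fn()}
		}(name, fn)
	}

	// Receive exactly one result per lookup that was started.
	out := make(map[string]string, len(lookups))
	for range lookups {
		r := <-ch
		out[r.name] = r.value
	}
	return out
}

func main() {
	page := fetchAll(map[string]func() string{
		"product":  func() string { return "Blue mug" },
		"stock":    func() string { return "12 in stock" },
		"shipping": func() string { return "2-day delivery" },
		"discount": func() string { return "10% off" },
	})

	// Sort the keys so the printed order is stable between runs.
	keys := make([]string, 0, len(page))
	for k := range page {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	for _, k := range keys {
		fmt.Printf("%s: %s\n", k, page[k])
	}
}
```

In a real service each lookup would be a network call, and the handler would usually also pass a `context.Context` so that slow lookups can be cancelled.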

Rich Package Support For Any Use Case

Cloud-native systems rely on communication between microservices and external APIs. Go addresses this through its standard library. The net package supports network connections, data transmission, and request handling, which are critical for building distributed services across servers, clusters, or cloud platforms.

Built-in support for HTTP (the protocol used for communication across the web and between Cloud-Native services), provided by the net/http package, makes it easy to expose APIs and service endpoints without external dependencies. This is essential in microservices architecture, where services often interact through RESTful APIs.

Another critical component is the ability to process data in commonly accepted formats. JSON is the most widely used one for exchanging data. Go’s encoding/json package simplifies both serialization (converting Go data into JSON) and deserialization (parsing JSON into Go data structures). This native support eliminates the need for third-party libraries.
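A minimal round trip with encoding/json might look like this. The `Product` type and its fields are hypothetical, chosen only to show how struct tags map Go field names to JSON keys.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Product is a hypothetical payload exchanged between two services.
type Product struct {
	ID    int     `json:"id"`
	Name  string  `json:"name"`
	Price float64 `json:"price"`
}

// encode serializes a Product into a JSON string.
func encode(p Product) (string, error) {
	b, err := json.Marshal(p)
	if err != nil {
		return "", err
	}
	return string(b), nil
}

// decode parses a JSON string back into a Product.
func decode(s string) (Product, error) {
	var p Product
	err := json.Unmarshal([]byte(s), &p)
	return p, err
}

func main() {
	s, err := encode(Product{ID: 7, Name: "Blue mug", Price: 9.5})
	if err != nil {
		fmt.Println("encode failed:", err)
		return
	}
	fmt.Println(s) // prints {"id":7,"name":"Blue mug","price":9.5}

	p, err := decode(s)
	if err != nil {
		fmt.Println("decode failed:", err)
		return
	}
	fmt.Printf("%+v\n", p) // prints {ID:7 Name:Blue mug Price:9.5}
}
```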

Beyond that, the standard library includes packages like io and os for file and environment operations, sync for concurrency control, and context for managing timeouts and cancellations in distributed services. All these capabilities are essential for operating reliably at scale.

Adherence to DevOps Standards

DevOps is a practice that enables faster software delivery with greater reliability and automation. As already mentioned, Go compiles directly into a static binary, which facilitates containerization, a core concept in DevOps.

Kubernetes (a system for managing and scaling containers across clusters of machines) automates tasks like deploying new versions, balancing traffic between services, and recovering from failures. Because Go applications are compiled and container-ready, they’re straightforward to deploy and orchestrate in Kubernetes environments.

Monitoring is also essential in Cloud-Native systems. Go integrates smoothly with Prometheus, a popular metrics tool, thanks to native client libraries that expose application metrics in a format Prometheus can scrape directly. This allows teams to track performance and respond quickly if something goes wrong.

Cloud-Native Architecture: When It Adds Maximum Value

By now, we’ve examined what cloud-native development entails, why Go has gained such strong traction in this space, and where its limitations lie. We’ve even compared it with other popular languages to understand when Go offers real strategic advantages.

Yet technology decisions don’t happen in a vacuum. Beyond abstract metrics or language design choices, much depends on how well a tool aligns with conditions like team experience, infrastructure demands, release cycles, and business goals.

That’s why the next logical step is to explore concrete scenarios where adopting a cloud-native architecture is not just viable but particularly advantageous.

You Have A Team Experienced in Other Tech Stacks

When a team enters the cloud-native space with strong backgrounds in other technologies, the challenge is not a lack of engineering skill, but the shift in architectural thinking.

Cloud-native is not defined by language choice; it’s a paradigm for building distributed, resilient, and scalable applications. This model often involves containerized microservices, infrastructure-as-code, and continuous integration/continuous deployment (CI/CD) pipelines.

In this scenario, cloud-native architecture allows teams to build incrementally, starting small while aligning with modern infrastructure standards. Experienced developers can reuse familiar patterns from traditional stacks, gradually adopting orchestration tools like Kubernetes and container runtimes like Docker. This smooth learning curve helps teams bridge the gap between legacy systems and future-ready platforms.

You Want to Build an Application Where Components Must Scale Independently

Scalability is one of the core reasons teams move to cloud-native systems. Unlike monolithic applications, where scaling requires replicating the entire app, cloud-native architecture embraces fine-grained scalability.

By designing systems as loosely coupled microservices, each component can scale based on its own traffic and performance requirements. A recommendation engine under load doesn’t require scaling the user authentication system; each module operates with its own compute resources, autoscaling policies, and failure boundaries.

Container orchestration platforms like Kubernetes make this model practical. They enable automated scaling, health checks, and traffic routing at the level of individual services. The result is a system that adapts in real time to changing demand, while optimizing infrastructure use.

You Need Systems Where Individual Functions Must Be Released Without Full Redeployment

Modern product development requires the ability to deliver features fast, without compromising system stability. In traditional monoliths, even small changes often require full-system redeployments.

Cloud-native systems solve this by isolating functionality into independently deployable units. Microservices can be updated, rolled back, or replaced without affecting the rest of the system. This enables safer release cycles, frequent deployments, and faster recovery when issues arise.

To support this model, the underlying tooling must prioritize speed and predictability. Languages like Go, with fast build times and static binaries, complement this architecture by making packaging and deployment faster and more reliable. But the primary benefit lies in the architecture itself – decoupled, flexible, and built for iteration.

You’re Building Strategic Enterprise Systems That Require Ongoing Development

Enterprise systems are rarely static. They evolve continuously to meet changing business needs. This calls for architectures that can adapt over time, without becoming overly complex or brittle.

Cloud-native design provides the necessary modularity and lifecycle flexibility. Systems are decomposed into services that reflect business domains, making it easier to assign ownership, evolve functionality independently, and introduce new capabilities.

Governance and maintainability are also critical. Tools that enforce clear dependency management, observable behavior, and minimal configuration overhead reduce technical debt over the long term. While Go contributes with its simplicity and maintainability, the real strength comes from the cloud-native structure that supports change without disrupting the whole system.

You’ll Need Constant Updates

Frequent, reliable releases have become the norm in competitive software environments. Cloud-native enables this rhythm by integrating automation, testing, and deployment into the heart of the development cycle.

CI/CD pipelines, combined with containerization and orchestration, allow teams to release updates daily or even hourly. Small, focused services reduce the blast radius of changes, while observability tools and rollback strategies mitigate risk.

Consistency across environments is key. Cloud-native architecture promotes immutable infrastructure, where each deployment is predictable and reproducible. Languages like Go, which compile into static binaries and avoid runtime surprises, align well with this philosophy, but again, it’s the architecture that makes fast, confident releases possible.

In all of these scenarios, the benefits of cloud-native development are tied not to hype, but to real engineering outcomes: modularity, resilience, adaptability, and speed. While Go is a natural fit for these goals, the guiding force is the architecture itself – one designed for a cloud-first, change-driven world.

Conclusion

To suit the demands of the cloud environment, a language must meet at least three essential criteria: speed and performance, efficiency in distributed systems, and low overhead for DevOps. Golang meets all three, which is why many teams use it by default.

It compiles down to single binaries that are simple to ship in containers, handles concurrency with ease thanks to goroutines, and works seamlessly with tools like Kubernetes, Docker and Prometheus. It’s not perfect (no language is), but Go’s clean design and focus on simplicity make it an ideal solution for modern container environments.

Experienced teams use a multilingual approach. By choosing languages based on technical requirements (rather than trends), they reduce complexity, increase developer productivity, and create more resilient systems.

Want to explore what Go can do for your product? With Go, we assist teams in crafting highly scalable, cloud-native solutions, and we’d love to hear what you’re working on.