The goal of neuromorphic computing is to physically emulate the brain’s neuro-synaptic organization in computer hardware. This bio-inspired approach aims to deliver radical improvements in computational efficiency, most notably lower energy consumption and faster processing.

At Rubyroid Labs, our AI specialists, who integrate advanced AI solutions into products, explain why neuromorphic computing has become feasible today, how it is structured, and what practical applications we can expect in the near future.

Contents

- Why neuromorphic computing is gaining traction

- How neuromorphic computing works

- What are the practical applications of neuromorphic computing?

- How can neuromorphic computing shape future product strategy?

- Conclusion

Why neuromorphic computing is gaining traction

The concepts behind neuromorphic computing have existed since the 1980s, but the field is experiencing a surge in interest and investment in 2025. Why?

First, the energy cost of training and deploying large-scale AI models has reached unsustainable levels. Estimates vary, but training a frontier model such as GPT-4 is thought to consume at least hundreds of thousands of kilowatt-hours of electricity, enough to power 50 to 150 or more average households for an entire year. Neuromorphic computing is seen as a pathway to more sustainable, “green” AI.
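The household comparison is simple division. The sketch below is illustrative only: it assumes an average household uses about 10,500 kWh per year (a round number chosen for the example, not a figure from this article) and tries a few hypothetical training budgets in the “hundreds of thousands to low millions of kWh” range.

```python
# Assumption: an average household uses roughly 10,500 kWh of electricity per year.
# This is an illustrative round number; adjust it for your region.
HOUSEHOLD_KWH_PER_YEAR = 10_500


def households_powered_for_a_year(training_kwh: float,
                                  household_kwh: float = HOUSEHOLD_KWH_PER_YEAR) -> float:
    """How many households could one training run's energy supply for a full year?"""
    return training_kwh / household_kwh


# Hypothetical training budgets, not measured values for any specific model.
for training_kwh in (500_000, 1_000_000, 1_500_000):
    households = households_powered_for_a_year(training_kwh)
    print(f"{training_kwh:>9,} kWh -> ~{households:.0f} households for a year")
```

Changing the household figure shifts the range proportionally, which is one reason published comparisons of this kind differ.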

Second, the devices and applications we use daily demand ultra-low latency and minimal power consumption. This makes neuromorphic computing a strong fit for edge AI: it promises high performance within the tight power budgets of battery-operated devices.

Third, decades of progress in semiconductor manufacturing have enabled the creation of complex neuromorphic chips that house millions of artificial neurons and synapses. Simultaneously, breakthroughs in materials science are yielding novel components that emulate the brain’s neural connections more effectively than standard transistors.

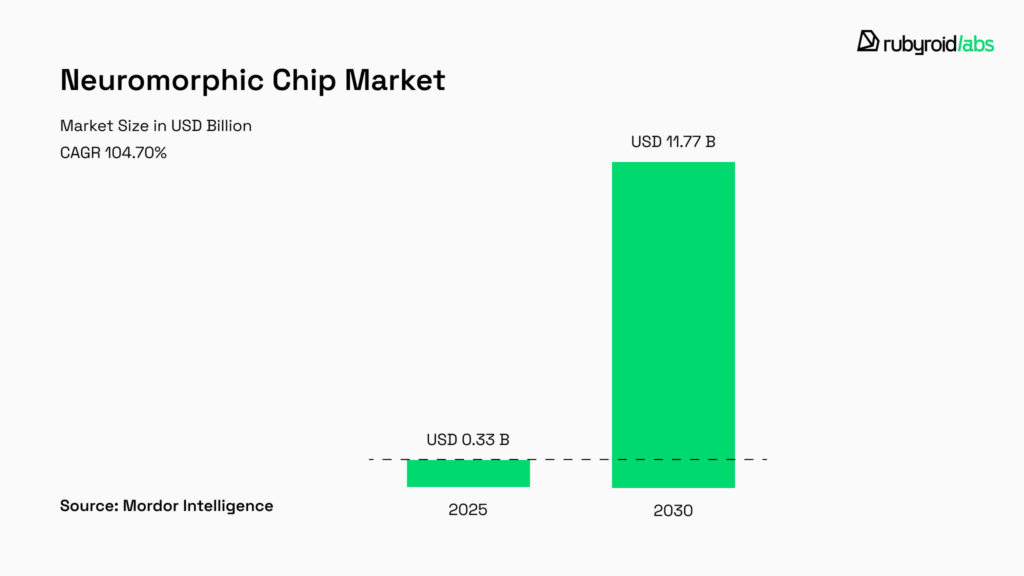

It is no surprise, then, that neuromorphic chip market analysis reports project an exceptional compound annual growth rate (CAGR) of 104.70% through 2030. The research and advisory firm Gartner has also identified neuromorphic computing as one of the most important upcoming technologies for businesses.
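If the 104.70% figure is read as a compound annual growth rate (our assumption; market reports usually quote growth this way), compounding it shows how extreme it is. A minimal sketch, assuming a five-year horizon such as 2025 to 2030:

```python
def growth_multiple(annual_rate: float, years: int) -> float:
    """Total growth multiple after compounding an annual rate for `years` years."""
    return (1 + annual_rate) ** years


# Assumption: 104.70% per year (1.047 as a fraction), compounded over five years.
multiple = growth_multiple(1.047, 5)
print(f"Market size multiplies by roughly {multiple:.0f}x over five years")
```

At that rate the market would double slightly more than every year, growing to about 36 times its starting size in five years.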

How neuromorphic computing works

Since neuromorphic computing is inspired by the brain, it directly models biological mechanisms using spiking neural networks (SNNs). These networks are composed of artificial neurons and synapses that mimic their biological counterparts.

In an SNN, each neuron has a charge, a threshold, and a delay. It accumulates charge over time, and only when this charge reaches its threshold does it “spike”, sending a signal through its synaptic connections. If the threshold isn’t reached, the accumulated charge gradually leaks away.
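The accumulate, threshold, spike, and leak cycle described above corresponds to the widely used leaky integrate-and-fire (LIF) neuron model. Below is a minimal Python sketch; the class name, the threshold of 1.0, the leak factor of 0.9, and the reset-to-zero behavior are illustrative assumptions, and the delay is omitted for brevity.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron; all parameter values are illustrative."""
    threshold: float = 1.0  # charge level that triggers a spike
    leak: float = 0.9       # fraction of charge kept per time step; the rest dissipates
    charge: float = 0.0     # current membrane charge

    def step(self, input_current: float) -> bool:
        """Advance one time step and return True if the neuron spikes."""
        # Leak first, then integrate the new input.
        self.charge = self.charge * self.leak + input_current
        if self.charge >= self.threshold:
            self.charge = 0.0  # reset after firing (one common convention)
            return True
        return False


neuron = LIFNeuron()
# Three weak inputs are not enough, and the charge leaks away during the quiet steps.
# A stronger burst then pushes the charge over the threshold.
inputs = [0.3, 0.3, 0.3, 0.0, 0.0, 0.6, 0.6]
spikes = [neuron.step(x) for x in inputs]
print(spikes)
```

Running it prints `[False, False, False, False, False, True, False]`: the neuron stays silent until enough input arrives close together in time.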

Synapses, represented by transistor-based devices, form the pathways between neurons. They have weights and delays that can be programmed, and often include a learning component, allowing them to adjust their strength based on network activity.
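One well-known example of such a learning component is spike-timing-dependent plasticity (STDP): a synapse strengthens when the sending (presynaptic) neuron fires shortly before the receiving (postsynaptic) one, and weakens when the order is reversed. This article does not say which rule any particular chip uses, so treat the following as a minimal sketch of the classic pair-based STDP rule; the function name and the constants `a_plus`, `a_minus`, and `tau` are illustrative assumptions.

```python
import math


def stdp_update(weight: float, t_pre: float, t_post: float,
                a_plus: float = 0.01, a_minus: float = 0.012, tau: float = 20.0,
                w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Pair-based STDP: adjust a weight from one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:
        # Pre fired before post (causal pairing): strengthen, more so for close pairs.
        weight += a_plus * math.exp(-dt / tau)
    elif dt < 0:
        # Post fired before pre (anti-causal pairing): weaken.
        weight -= a_minus * math.exp(dt / tau)
    # Clamp to a bounded range, since real synapses cannot grow without limit.
    return min(max(weight, w_min), w_max)


w = 0.5
w = stdp_update(w, t_pre=10.0, t_post=15.0)  # pre before post: weight rises
w = stdp_update(w, t_pre=40.0, t_post=30.0)  # post before pre: weight falls
print(round(w, 4))
```

The update depends only on locally available spike times, which is what makes rules of this kind attractive for on-chip learning.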

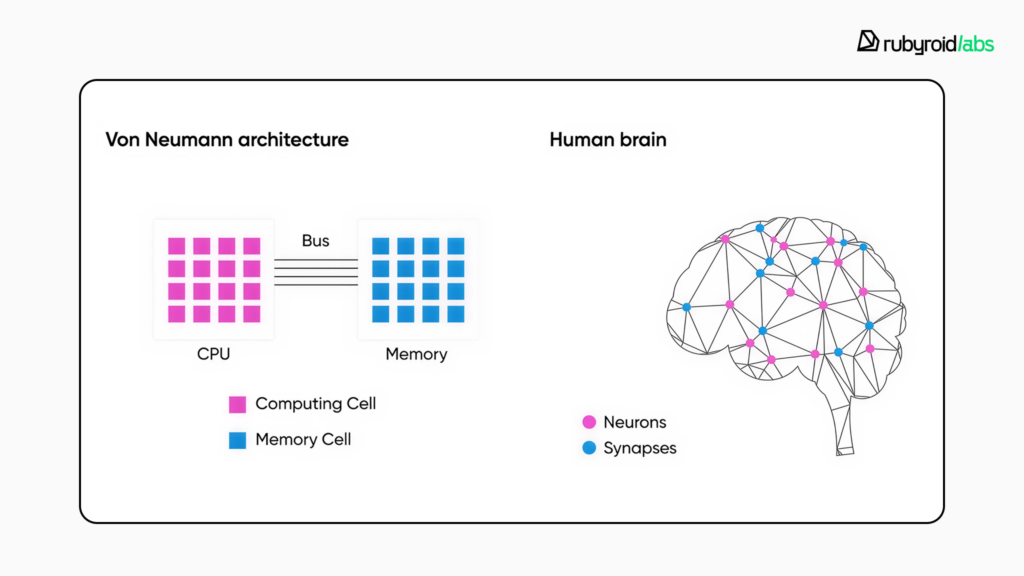

The core principles of neuromorphic computing set it apart from the von Neumann model that nearly all current computers are built upon.

Co-location of memory and processing. Conventional computers move data between a processor (CPU/GPU) and memory (RAM or storage). That transfer limits speed and consumes energy. Neuromorphic systems place memory and compute in the same physical device. That means less data movement, lower latency and far better energy efficiency.

Shift from a clock-driven to an event-driven model. In conventional computing, a global clock drives processors to execute instructions continuously, expending energy regardless of the data’s usefulness.

Computation in neuromorphic systems occurs only in response to “spikes” from other artificial neurons. This means that only the parts of the network processing new information consume significant power, while the rest of the system remains in a low-power idle state. This is the primary source of energy efficiency.
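To illustrate why event-driven processing saves work, the toy model below counts synaptic operations for one layer in a single time step. It is a sketch under stated assumptions: about 2% of 1,000 inputs spike, weights are random, and operation counts stand in for energy, which real hardware does not map one-to-one. The function names are hypothetical.

```python
import random


def clock_driven_layer(inputs: list[float],
                       weights: list[list[float]]) -> tuple[list[float], int]:
    """Dense update: every input/weight pair is processed, even when the input is 0."""
    out = [0.0] * len(weights[0])
    ops = 0
    for i, x in enumerate(inputs):
        for j, w in enumerate(weights[i]):
            out[j] += x * w
            ops += 1
    return out, ops


def event_driven_layer(spike_indices: list[int],
                       weights: list[list[float]]) -> tuple[list[float], int]:
    """Event-driven update: only the synapses of inputs that actually spiked are touched."""
    out = [0.0] * len(weights[0])
    ops = 0
    for i in spike_indices:
        for j, w in enumerate(weights[i]):
            out[j] += w  # a spike is a binary event, so no multiplication is needed
            ops += 1
    return out, ops


random.seed(0)
N_IN, N_OUT = 1_000, 100
weights = [[random.uniform(-1, 1) for _ in range(N_OUT)] for _ in range(N_IN)]
# Assumption: sparse activity, roughly 2% of inputs spike in this time step.
input_spikes = [1.0 if random.random() < 0.02 else 0.0 for _ in range(N_IN)]
spike_indices = [i for i, s in enumerate(input_spikes) if s]

dense_out, dense_ops = clock_driven_layer(input_spikes, weights)
event_out, event_ops = event_driven_layer(spike_indices, weights)
print(f"dense ops: {dense_ops:,}  event-driven ops: {event_ops:,}")
```

Both functions produce the same outputs, but the event-driven version does work proportional to the number of spikes rather than to the size of the layer.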

Parallelism. The human brain achieves its remarkable capabilities through the parallel operation of approximately 86 billion neurons. Neuromorphic systems replicate this principle by employing a large number of interconnected processing units (the artificial neurons).

Instead of executing tasks sequentially, a neuromorphic chip distributes the computational load across millions of parallel units.

| | Neuromorphic computing | Von Neumann architecture (CPU) |
|---|---|---|
| Processing model | Asynchronous, event-driven | Synchronous, sequential (at instruction level) |
| Memory & processing | Co-located (in-memory computing) | Physically separate (CPU & RAM) |
| Data flow / unit | Sparse, temporal spikes | Data & instructions via bus |
| Energy profile | Ultra-low power (milliwatts) | Moderate to high power (watts) |

Are neuromorphic computing and artificial neural networks the same?

Neuromorphic computing and artificial neural networks (ANNs) are both brain-inspired but distinct technologies.

ANNs are the software models behind most of today’s deep learning. They run on conventional von Neumann-based hardware, most often graphics processing units (GPUs), which are highly optimized for dense matrix multiplication.

Neuromorphic computing is based on co-developing hardware and software for spiking neural networks, which replicate the brain’s biological mechanisms to enable extremely efficient information processing.

| | Neuromorphic computing (with SNNs) | Conventional ANNs (on GPUs) |
|---|---|---|
| Processing model | Asynchronous, event-driven | Synchronous, parallel (at core level) |
| Memory & processing | Co-located (in-memory computing) | Physically separate (GPU & high-bandwidth memory) |
| Data flow / unit | Sparse, temporal spikes | Dense matrix/vector operations |
| Energy profile | Ultra-low power (milliwatts) | Very high power (hundreds of watts) |

What are spiking neural networks?

As previously mentioned, the computational foundation of neuromorphic systems is built on spiking neural networks.

SNNs are often described as the third generation of neural networks. They differ from earlier generations by encoding information in the timing and frequency of discrete spikes rather than in continuous values computed from static inputs. This time-based approach makes them particularly well suited to dynamic, time-varying data.
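To make “timing and frequency” concrete, here are two common ways to turn an ordinary value, such as a pixel intensity in [0, 1], into a spike train: rate coding (stronger values spike more often) and latency coding (stronger values spike earlier). The article does not prescribe a scheme, so this is a minimal sketch with illustrative function names and a 10-step window.

```python
import random


def rate_encode(value: float, num_steps: int, rng: random.Random) -> list[int]:
    """Rate coding: a value in [0, 1] is the per-step probability of a spike."""
    value = min(max(value, 0.0), 1.0)
    return [1 if rng.random() < value else 0 for _ in range(num_steps)]


def latency_encode(value: float, num_steps: int) -> list[int]:
    """Latency (time-to-first-spike) coding: stronger values spike earlier; 0 never spikes."""
    value = min(max(value, 0.0), 1.0)
    train = [0] * num_steps
    if value > 0.0:
        spike_time = round((1.0 - value) * (num_steps - 1))
        train[spike_time] = 1
    return train


rng = random.Random(42)  # fixed seed so the rate-coded trains are reproducible
for intensity in (0.9, 0.2):
    print(intensity,
          "rate:", rate_encode(intensity, 10, rng),
          "latency:", latency_encode(intensity, 10))
```

Rate coding is robust but needs many spikes per value; latency coding uses a single spike, which is closer to the sparse activity neuromorphic hardware rewards.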

The time-based approach of SNNs creates a natural synergy with novel event-based sensors, such as the dynamic vision sensors used in event cameras. Like the human retina, these sensors do not capture full frames; instead, each pixel outputs an event only when the brightness it sees changes. Because events are reported in real time, such cameras largely avoid the motion blur of traditional cameras, where movement during each frame’s exposure smears the image.

Consequently, the rise of neuromorphic computing is fueling an evolution in sensor technology.
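The event-camera principle can be emulated in a few lines. Real sensors do this asynchronously in analog circuitry at every pixel; the sketch below only approximates the idea from a sequence of frames, emitting an ON (+1) or OFF (-1) event when a pixel’s log brightness moves far enough from its last reference level. The function name and the contrast threshold of 0.2 are illustrative assumptions.

```python
import math

EPS = 1e-6  # avoids log(0) for completely dark pixels


def frames_to_events(frames: list[list[float]],
                     threshold: float = 0.2) -> list[tuple[int, int, int]]:
    """Emit (time, pixel, polarity) events when log brightness changes by >= threshold."""
    reference = [math.log(v + EPS) for v in frames[0]]
    events = []
    for t, frame in enumerate(frames[1:], start=1):
        for px, value in enumerate(frame):
            level = math.log(value + EPS)
            delta = level - reference[px]
            if abs(delta) >= threshold:
                events.append((t, px, 1 if delta > 0 else -1))
                # Reset the reference only where an event fired, so slow
                # changes accumulate until they cross the threshold.
                reference[px] = level
    return events


# A 4-pixel "scene": only pixel 2 changes, so only pixel 2 produces events.
frames = [
    [0.5, 0.5, 0.5, 0.5],
    [0.5, 0.5, 0.8, 0.5],
    [0.5, 0.5, 0.3, 0.5],
    [0.5, 0.5, 0.3, 0.5],
]
print(frames_to_events(frames))
```

The static pixels generate no data at all, which is exactly the sparse input that event-driven neuromorphic processors are built to consume.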

What are the practical applications of neuromorphic computing?

According to IBM, a leader in the neuromorphic chip market, the current practical applications of neuromorphic systems remain limited.

However, the most promising areas are autonomous vehicles, cybersecurity, edge AI, pattern recognition, and robotics.

Automotive industry

Neuromorphic processors are well suited to advanced driver assistance systems (ADAS) and autonomous driving because they can process multiple asynchronous data streams with ultra-low latency.

This enables critical computations to run directly in the vehicle, removing the dependence on cloud connectivity and the risks that come with it. Their high energy efficiency could also help extend the range of electric vehicles.

Reflecting this growing focus, Mercedes-Benz recently spun off its Silicon Valley engineering team into a separate company, Athos Silicon, dedicated to developing next-generation automotive chips.

Cybersecurity, aerospace and defense

Neuromorphic systems can be used to detect behavioral anomalies in networks. For autonomous drones and robots, they can enable navigation and decision-making in complex environments without relying on GPS. The technology can also be effective for signal processing in satellite communications and electronic warfare systems.

Edge AI

The field offers a promising path to decentralize large language models (LLMs). By running smaller, efficient LLMs directly on devices, neuromorphic computing could enable offline, private AI interactions, leading to personalized assistants that operate without constant cloud connectivity.

Smart sensors & IoT

In consumer devices, neuromorphic chips enable “always-on” features without rapidly draining the battery. In industrial IoT, they can be used for predictive maintenance, analyzing sensor data for early anomaly detection.

The combination of event-based cameras with neuromorphic processors creates efficient systems for robotics and surveillance that process only scene changes.

Healthcare

The low-power, real-time analysis capabilities of neuromorphic chips make them well suited to personalized health monitoring and advanced prosthetics. These systems enable continuous biosignal monitoring directly on wearable devices without transmitting confidential data to the cloud.

The technology can also be used to create adaptive prosthetics that interpret neural signals for more natural control, and to accelerate the analysis of complex medical images.

What challenges hinder the adoption?

Programming neuromorphic systems requires a completely new mindset. Currently, only a limited number of experts possess the cross-disciplinary knowledge needed to design, train, and deploy such brain-like AI systems effectively, so experimentation has so far been concentrated in well-funded research labs and tech companies.

Another major hurdle is training spiking neural networks. Because a spike is an all-or-nothing event, the gradient-based training used for ANNs does not apply directly. Researchers therefore often convert pre-trained artificial neural networks into SNNs, but this workaround can lead to accuracy loss. Furthermore, the lack of standardized evaluation criteria makes it difficult to compare different neuromorphic systems or objectively measure their advantages over traditional architectures.
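Where does the accuracy loss in conversion come from? A common conversion approach maps each ReLU activation of the ANN onto the firing rate of a spiking neuron. The sketch below assumes a non-leaky integrate-and-fire neuron with “reset by subtraction” driven by a constant input; function names and parameters are illustrative. It shows that the firing rate only approximates ReLU: the estimate is coarse when few time steps are simulated, and inputs above the threshold saturate.

```python
def relu(x: float) -> float:
    return max(0.0, x)


def if_neuron_rate(x: float, num_steps: int, threshold: float = 1.0) -> float:
    """Firing rate (scaled by threshold) of an integrate-and-fire neuron with constant input x.

    Uses reset by subtraction, so leftover charge carries over to the next step.
    """
    charge, spike_count = 0.0, 0
    for _ in range(num_steps):
        charge += x
        if charge >= threshold:
            charge -= threshold
            spike_count += 1
    return spike_count * threshold / num_steps


for x in (-0.5, 0.25, 0.33, 0.8):
    short = if_neuron_rate(x, num_steps=8)
    long = if_neuron_rate(x, num_steps=1000)
    print(f"x={x:5.2f}  ReLU={relu(x):.3f}  SNN(8 steps)={short:.3f}  SNN(1000 steps)={long:.3f}")
```

Simulating more time steps shrinks the error but raises latency and energy, which is the trade-off that makes direct SNN training an active research topic.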

However, as research accelerates and investment grows, frameworks are maturing and more engineers are becoming fluent in this new computational paradigm. Broader adoption is increasingly a matter of time.


How can neuromorphic computing shape future product strategy?

Cloud-based AI is reaching its limits: latency persists, cloud costs keep growing, and the environmental impact is becoming unsustainable. By moving intelligence to where data is produced, neuromorphic computing offers a way out of this pattern.

This shift enables a fundamental change in business models. Cloud subscriptions could be supplemented (or even replaced) by neuromorphic hardware. This opens new monetization paths, enhances data governance, and enables smoother, safer user experiences.

Companies that rely solely on GPU-cloud architectures risk being overtaken by competitors that adopt neuromorphic computing, since those competitors may be able to offer comparable or better intelligence with lower power consumption, longer battery life, and strong on-device privacy.

Ultimately, neuromorphic computing promises entirely new product categories: devices that run for weeks on a single charge and process sensitive data fully offline.

Conclusion

Neuromorphic computing represents one of the most promising shifts in artificial intelligence because it offers capabilities that conventional architectures struggle to match:

- Ultra-low latency

- Ultra-low power consumption

These qualities are vital for automotive, cybersecurity, edge AI, IoT, and healthcare.

Although practical applications remain limited today, a major shift is projected by 2030. Over the next five years, industry leaders are expected to make significant technological advances and consolidate their market positions, paving the way for novel services uniquely enabled by neuromorphic computing.

Companies that start leveraging neuromorphic architectures at the earliest opportunity will be better positioned to reduce infrastructure costs, enhance data privacy, and deliver next-generation user experiences.

As a company that integrates cutting-edge technologies into client products, Rubyroid Labs is closely monitoring these developments.