Nearly three-quarters of the 400+ corporate leaders surveyed by Insight Enterprises believe AI assistants will reshape their industry within three years. The question is: will they reshape your product first, or your competitor’s?

In the following sections, we’ll show how Ruby on Rails makes integrating AI assistants straightforward, highlight use cases across industries, share how our clients have used AI assistants to strengthen their products, and offer practical advice on avoiding common pitfalls during integration.

Contents

- AI in Ruby on Rails Software: How to Build Intelligent Apps without the Extra Complexity

- How AI Assistants Strengthen Ruby on Rails Applications

- Case Studies: AI Assistants in RoR Projects

- AI Models That Pair Well with RoR

- Which AI Model to Choose for Your Rails Application

- Conclusion

AI in Ruby on Rails Software: How to Build Intelligent Apps without the Extra Complexity

Python is the industry leader for developing and training ML models. However, if you’re adding AI features to a product without training your own models, the “best language” is usually the one your stack already uses. Many modern apps rely on open-source or third-party models and integrate them through simple HTTP APIs, so you can often stay in your existing stack, such as Ruby on Rails, and orchestrate AI through API calls.

As a company that specializes in Ruby on Rails services, we can say with confidence: if your software is built on Ruby on Rails, you don’t need to spin up a separate Python service to connect to these models. A robust gem ecosystem (langchainrb, ruby-openai, ruby-gemini, and anthropic-sdk-ruby) lets your RoR codebase incorporate AI capabilities directly.

How Ruby Gems Unlock AI in Rails

Let’s take langchainrb as an example. This framework connects AI models to Ruby applications, including Rails, and lets you add natural language processing without giving up the convenience of Ruby. After connecting langchainrb to your preferred provider (OpenAI, Google Gemini, Anthropic Claude, etc.), you specify how your application should use the model. Setting it up feels much like adding any other Rails feature: you install the gem, configure it with your API keys, and call the model through Ruby methods.

Sidekiq and other background job processors run resource-intensive AI tasks without slowing down the rest of your application. Rails’ convention-over-configuration approach also makes it quick to test and iterate on new AI features.
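A rough sketch of that pattern, using nothing beyond Ruby’s standard library: in a Rails app this would normally be a Sidekiq or Active Job class backed by Redis or a database, but the hypothetical `AssistantJobRunner` below uses a thread-safe `Queue` and one worker thread to show the same idea. The caller gets a job id back immediately, and the slow model call happens elsewhere.

```ruby
require "securerandom"

# Hypothetical stand-in for a Sidekiq or Active Job worker. Jobs go onto a
# thread-safe Queue and a background thread processes them, so the caller
# (for example, a controller action) never waits on the slow model call.
class AssistantJobRunner
  def initialize(&model_call)
    @model_call = model_call # block that performs the (slow) AI request
    @queue = Queue.new
    @results = {}            # job_id => model reply
    @lock = Mutex.new
    @worker = Thread.new { process_jobs }
  end

  # Returns a job id immediately instead of blocking on the model.
  def enqueue(prompt)
    job_id = SecureRandom.uuid
    @queue << [job_id, prompt]
    job_id
  end

  # Reply for a finished job, or nil while it is still pending.
  def result(job_id)
    @lock.synchronize { @results[job_id] }
  end

  # Lets the worker finish queued jobs, then stops it.
  def shutdown
    @queue << :stop
    @worker.join
  end

  private

  def process_jobs
    loop do
      job = @queue.pop
      break if job == :stop

      job_id, prompt = job
      reply = @model_call.call(prompt)
      @lock.synchronize { @results[job_id] = reply }
    end
  end
end

# Usage with a fake model that takes 100 ms to answer.
runner = AssistantJobRunner.new { |prompt| sleep 0.1; "Echo: #{prompt}" }
job_id = runner.enqueue("Summarize order #42") # returns at once
runner.shutdown
puts runner.result(job_id) # prints: Echo: Summarize order #42
```

In a Rails app, the worker would typically save the reply to a database record that the UI then reads or is notified about, rather than keeping it in memory.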

The functionality you get depends on the provider you choose, and you’re not limited to just one. A Rails application can integrate several models at once, which expands the scope of what it can do.
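As a minimal illustration of running several models side by side, the hypothetical `ModelRouter` below maps task types to a provider and model. The task names are invented for the example, and the model IDs are placeholders to check against each provider’s documentation.

```ruby
# Hypothetical routing table: each task type maps to a provider and model.
# The model IDs are placeholders; check each provider's docs for current IDs.
ModelChoice = Struct.new(:provider, :model)

class ModelRouter
  ROUTES = {
    document_analysis: ModelChoice.new(:openai, "gpt-5"),
    support_chat: ModelChoice.new(:anthropic, "claude-sonnet-4"),
    live_data: ModelChoice.new(:google, "gemini-2.5-pro"),
    bulk_faq: ModelChoice.new(:deepseek, "deepseek-reasoner")
  }.freeze

  def initialize(default_task: :support_chat)
    @default_task = default_task
  end

  # Unknown task types fall back to the default route instead of failing.
  def route(task)
    ROUTES.fetch(task) { ROUTES.fetch(@default_task) }
  end
end

router = ModelRouter.new
router.route(:document_analysis).provider # => :openai
router.route(:translation).model          # => "claude-sonnet-4" (fallback)
```

Each provider would then be called through its own client (for example, one built on ruby-openai and another on the Anthropic SDK), chosen by the `provider` field.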

So what kind of AI functionality can Rails bring to life?

- Conversational interfaces powered by large language models;

- Recommendation systems that adapt to user preferences;

- AI-enhanced search with semantic understanding;

- Image generation;

- Automated code generation and testing.

Several AI capabilities can be combined in a single interactive tool: an AI assistant. Unlike traditional chatbots, which follow prescribed scenarios, AI assistants can ask clarifying questions, offer recommendations, and pick up on emotional cues in a user’s message to respond with more empathy. They can connect to multiple systems and applications, and they can keep improving over time through user feedback and model updates.

How AI Assistants Integrate into Rails Applications

Bringing an AI assistant into a Rails application means opening a channel between your app and a reasoning engine in the cloud. The model itself lives outside your Rails codebase and runs on OpenAI, Google AI, DeepSeek, or other platforms. Your application sends user input to the model through an API call, receives the model’s output, and presents it in your interface.
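A minimal sketch of that round trip using only Ruby’s standard library, assuming OpenAI’s Chat Completions endpoint and an `OPENAI_API_KEY` environment variable. The `AssistantClient` class is hypothetical; in production you would more likely use a gem such as ruby-openai. Building the request and parsing the reply are kept separate from the network call so they can be tested offline.

```ruby
require "net/http"
require "json"
require "uri"

# Hypothetical thin client for OpenAI's Chat Completions endpoint.
class AssistantClient
  ENDPOINT = URI("https://api.openai.com/v1/chat/completions")

  # The key comes from the environment, never from source code.
  def initialize(api_key: ENV.fetch("OPENAI_API_KEY"), model: "gpt-5")
    @api_key = api_key
    @model = model
  end

  # Wraps the user's input in a JSON POST request.
  def build_request(user_input)
    request = Net::HTTP::Post.new(ENDPOINT)
    request["Authorization"] = "Bearer #{@api_key}"
    request["Content-Type"] = "application/json"
    request.body = JSON.generate({
      model: @model,
      messages: [{ role: "user", content: user_input }]
    })
    request
  end

  # Extracts the assistant's text from the JSON response body.
  def parse_reply(json_body)
    JSON.parse(json_body).dig("choices", 0, "message", "content")
  end

  # Performs the HTTPS call; run it from a background job, not a request cycle.
  def ask(user_input)
    response = Net::HTTP.start(ENDPOINT.host, ENDPOINT.port, use_ssl: true, read_timeout: 60) do |http|
      http.request(build_request(user_input))
    end
    raise "Model API error: #{response.code}" unless response.is_a?(Net::HTTPSuccess)

    parse_reply(response.body)
  end
end

# Usage (needs a real key and network access):
#   client = AssistantClient.new
#   puts client.ask("Which plan includes priority support?")
```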

The ruby-openai gem and related libraries handle the details of making API requests and returning results. Frameworks such as langchainrb or BoxCars let you chain multiple prompts into a sequence, store context from previous conversations, and give the model access to additional tools. Gems like openai-toolable make it possible to expose Ruby methods as functions the AI can call.
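To illustrate the idea behind tool calling (this is not the API of openai-toolable or any other gem), the hypothetical `ToolRegistry` below describes a Ruby method to the model in the JSON Schema “tools” format that OpenAI-style APIs accept, then runs the matching Ruby code when the model replies with a function name and JSON-encoded arguments. The `ORDERS` hash is a stand-in for an ActiveRecord lookup.

```ruby
require "json"

# Hypothetical registry: describes Ruby code to the model and runs it on request.
class ToolRegistry
  Tool = Struct.new(:name, :description, :parameters, :handler)

  def initialize
    @tools = {}
  end

  def register(name, description:, parameters:, &handler)
    @tools[name.to_s] = Tool.new(name.to_s, description, parameters, handler)
  end

  # Tool definitions in the shape of an OpenAI-style "tools" array.
  def schemas
    @tools.values.map do |t|
      { type: "function",
        function: { name: t.name, description: t.description, parameters: t.parameters } }
    end
  end

  # The model returns a function name plus JSON-encoded arguments.
  def dispatch(name, arguments_json)
    tool = @tools.fetch(name) { raise ArgumentError, "Unknown tool: #{name}" }
    args = JSON.parse(arguments_json, symbolize_names: true)
    tool.handler.call(**args)
  end
end

ORDERS = { "A100" => "shipped" }.freeze # stand-in for a database lookup

tools = ToolRegistry.new
tools.register(:order_status,
               description: "Look up the shipping status of an order",
               parameters: { type: "object",
                             properties: { order_id: { type: "string" } },
                             required: ["order_id"] }) do |order_id:|
  ORDERS.fetch(order_id, "not found")
end

tools.dispatch("order_status", '{"order_id":"A100"}') # => "shipped"
```

Unknown tool names raise an error instead of being silently ignored, so a model can only trigger code you have explicitly registered.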

This turns the assistant from a simple text generator into an agent capable of performing actions. It can be embedded as a live chat on your support page or as a side panel that analyzes data and offers insights. Technically, the pattern stays the same: Rails collects the user’s message, sends it to the model, and returns the response. For the end user, though, the result is a conversation with software that feels intelligent and empathetic.
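That pattern can be sketched as a plain Ruby object. The hypothetical `Conversation` class below records each user turn, sends the full history to the model, and stores the reply, which is how the assistant keeps context between messages. The model is injected as any callable, so a fake one is used here; in a real app it would wrap an API client, and the history would live in the database.

```ruby
# Hypothetical conversation object that keeps context across turns.
class Conversation
  attr_reader :messages

  def initialize(system_prompt:, model:)
    @messages = [{ role: "system", content: system_prompt }]
    @model = model # any object responding to #call(messages) and returning a String
  end

  # Record the user turn, call the model with the full history,
  # then record and return the assistant turn.
  def reply_to(user_text)
    @messages << { role: "user", content: user_text }
    answer = @model.call(@messages)
    @messages << { role: "assistant", content: answer }
    answer
  end
end

# Fake model that proves it sees earlier turns by counting user messages.
fake_model = ->(msgs) { "You have sent #{msgs.count { |m| m[:role] == 'user' }} message(s)." }
chat = Conversation.new(system_prompt: "You are a helpful support agent.", model: fake_model)
chat.reply_to("Hi")                 # => "You have sent 1 message(s)."
chat.reply_to("Where is my order?") # => "You have sent 2 message(s)."
```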

How AI Assistants Strengthen Ruby on Rails Applications

The potential of incorporating AI-powered assistants into Rails apps is still overlooked by many decision-makers. Our tech team wants to bring clarity to this topic and highlight why these assistants are not a passing trend.

Market Overview: Adoption of AI Assistants

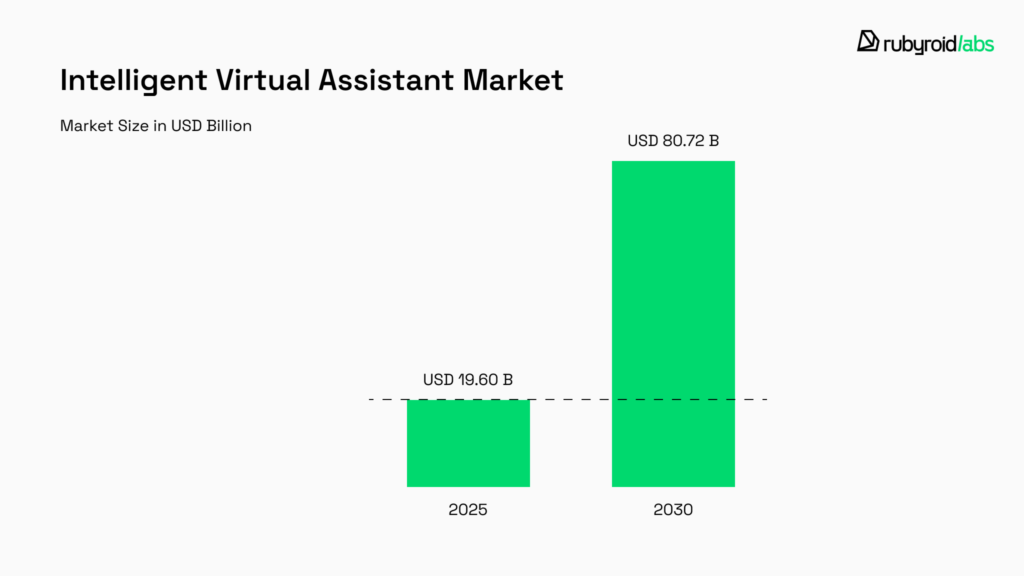

If we look at recent data, the Intelligent Virtual Assistant market is projected to reach over USD 80 billion by 2030, growing at a CAGR of 32.72%. Such dynamics show that assistants are quickly becoming an integral part of business strategies.

The surge began during the pandemic. According to the Smart Audio Report, 77% of US adults adapted their daily routines during COVID-19, and many turned to voice assistants for convenience and even emotional support. This shift revealed a deeper truth: people are willing to trust and rely on virtual assistants when circumstances push them to seek new ways of interaction.

From our perspective, these numbers reflect a broader trend. Enterprises see assistants as a way to:

- enhance customer experience without scaling support teams linearly;

- deliver personalized interactions at scale;

- improve operational efficiency.

In retail and e-commerce, they drive personalized shopping journeys. In banking and finance, they streamline customer support and improve transaction security. In healthcare, they support patients with scheduling, medication tracking, and chronic disease management.

This aligns with what we observe in Rails-based projects: organizations adopt assistants for measurable impact on retention, conversion, and customer satisfaction. Each year, businesses push harder to exceed customer expectations with increasingly sophisticated technologies. One of the most decisive directions is the shift toward hyper-personalization in client interactions, which we analyzed in detail in our recent article Why B2B Interfaces Will Go Hyper-Personal by 2027.

Given this, AI assistants are becoming a structural component of customer experience strategies. Their adoption is part of a broader transformation likely to shape enterprise practices over the coming decade, not a short-lived trend.

AI Assistant Use Cases & Value by Industry

Viewed within the broader trend toward hyper-personalization, the strategic importance of AI assistants becomes clear. To show how this works in practice, we’ve mapped the sectors where assistants already deliver tangible benefits to both end users and the companies that integrate them.

| Industry | Key Use Cases | Value for End Users | Value for Businesses |
|---|---|---|---|
| Retail & E-commerce | Personalized shopping guidance, product recommendations, order tracking | • Personalized shopping recommendations • Faster product discovery • Clear guidance during checkout • Real-time order help | • Increased average order value through upselling & cross-selling • Reduced cart abandonment rates • Higher conversion from returning customers • Improved ROI from marketing campaigns due to more precise targeting |
| Banking & Finance | Customer support, fraud detection, financial advisory | • Real-time answers on transactions and account issues • Personalized financial advice • Higher trust through proactive fraud alerts | • Lower operational costs by automating support • Faster loan approvals and account setups • Reduced fraud losses through AI-driven alerts • Higher customer retention |
| Healthcare | Appointment scheduling, medication reminders, patient support | • Instant access to medical FAQs • Guidance on scheduling and prescriptions • Personalized reminders for treatment adherence | • Reduced no-shows through automated scheduling reminders • Lower administrative costs via AI triage • Faster patient onboarding increases throughput |
| Real Estate | Virtual property tours, scheduling viewings, answering buyer questions | • Instant property recommendations • Answers to location, price, and amenities questions • Guided scheduling for property visits | • Faster sales cycle due to reduced time-to-decision • Lower acquisition costs per lead through automation • Higher deal closure rates improve commission margins • Improved ROI from marketing through more precise lead nurturing |
| Travel & Hospitality | Travel planning, booking changes, local recommendations | • Tailored itinerary suggestions • 24/7 multilingual booking support • Quick problem resolution during travel | • Increased booking volume via personalized offers • Reduced customer churn through real-time issue resolution • Fewer call center agents • Higher margin per booking by upselling add-ons |
| Manufacturing & Logistics | Equipment monitoring, supply chain coordination, workforce assistance | • Real-time order tracking • Transparency in delivery timelines • Simplified support for B2B clients | • Reduced downtime with predictive issue resolution • Faster supply chain coordination lowers costs • Lower customer service costs in B2B operations • Improved ROI from streamlined logistics management |
| Education | Tutoring, progress tracking, answering student questions | • Adaptive learning paths • On-demand tutoring support • Progress tracking and personalized feedback | • Scalable delivery of courses without linear growth in staff costs • Lower dropout reduces lost revenue per learner • Faster content personalization improves ROI on learning platforms |
| HR & Recruitment | Candidate screening, interview scheduling, onboarding support | • Faster responses during hiring • Smoother onboarding experience | • Time-to-hire reduction and lower agency spend • Fewer no-shows and scheduling conflicts |

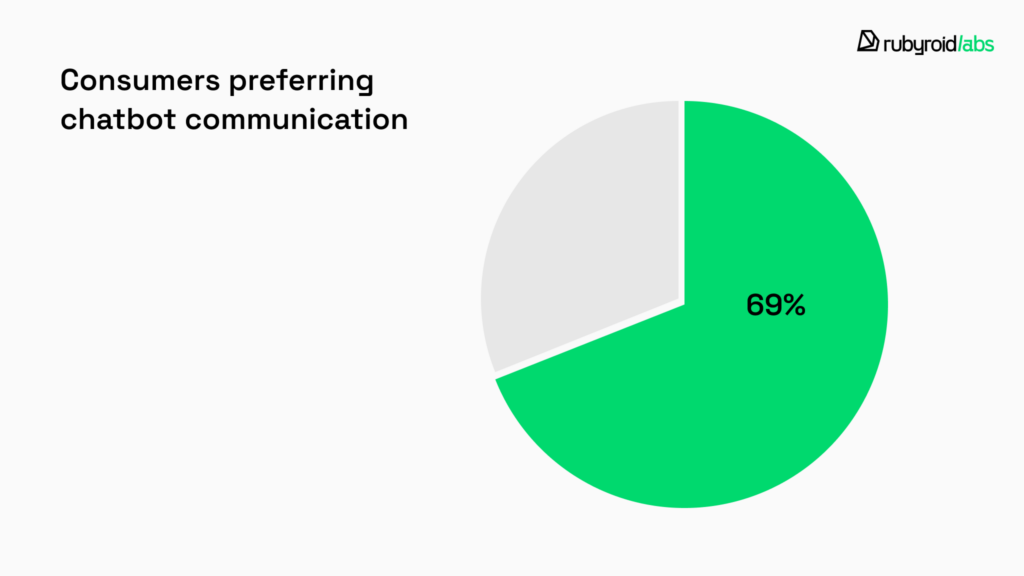

Studies consistently show that the introduction of conversational and task-oriented assistants reshapes customer expectations and financial outcomes. For example, Salesforce reports that 69% of consumers now prefer chatbots for quick brand communication.

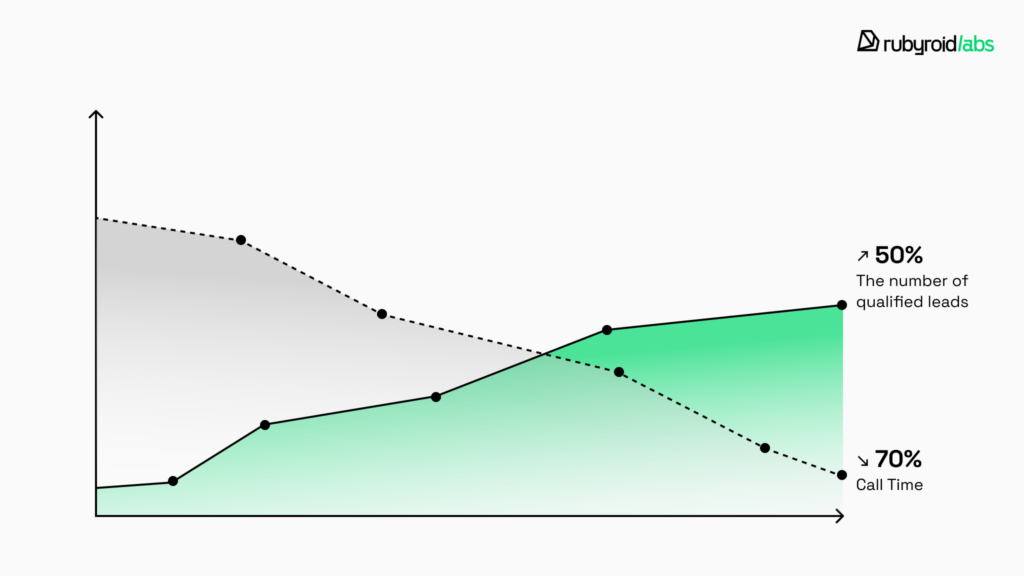

Research by Harvard Business Review highlights that sales teams leveraging AI can increase qualified leads by 50% while simultaneously cutting call time by up to 70%.

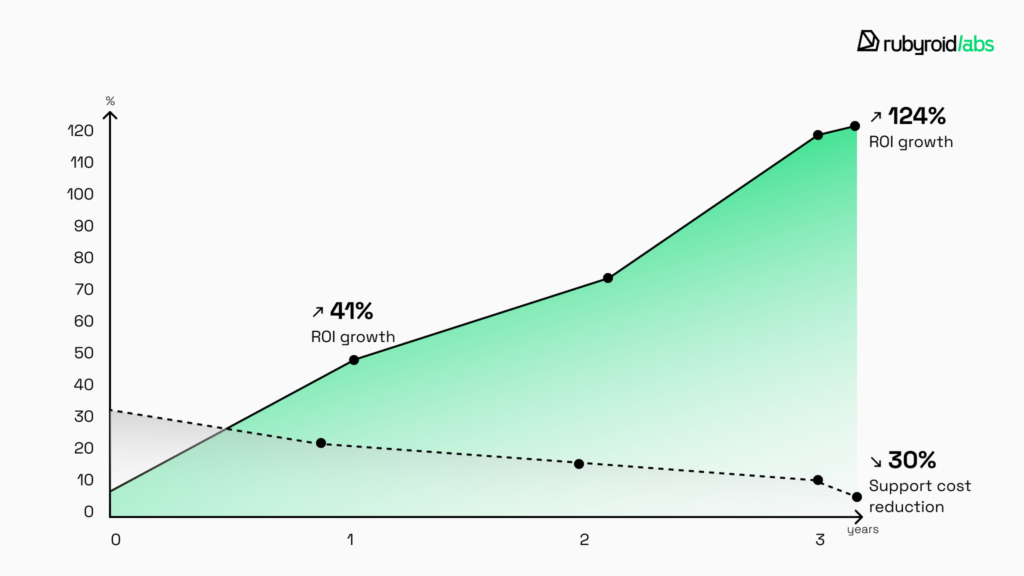

In customer service, AI adoption can cut overall support costs by up to 30%. Companies report a 41% ROI in the first year, which grows to 124% by year three. In other words, investing in AI-powered assistants is a step toward more efficient customer service spending.

The primary value of AI assistants lies in aligning customer and company interests: customers get personalized convenience, while businesses gain productivity and profit. That balance is what makes the investment sustainable over the long term.

Case Studies: AI Assistants in RoR Projects

In the previous section, we explored different industries and use cases for AI assistants. Now let’s look at how these technologies work in practice. Drawing on real projects completed by our team, we’ll show how AI-powered assistants helped our clients enhance their products and create new value for users across three very different industries.

AI Assistant for Bike Fitting and Sizing

Myvelofit is an online platform for cyclists that helps users choose the right bike and adjust it to their individual body parameters. The client came to us with a request to integrate an AI assistant that could simplify the bike fitting process.

Our team developed a chatbot powered by the OpenAI API and integrated it into the product in two weeks. The AI assistant performs two main functions.

First, bike sizing: users upload a photo or video and answer questions about their preferences, and the assistant suggests suitable bike models based on their body parameters and riding style.

Second, bike fitting: the system analyzes user videos to identify potentially harmful riding positions and provides precise recommendations, such as adjusting the saddle height by a few centimeters or changing the handlebar angle.

After testing the MVP, we refined the response logic, improved data processing speed and accuracy, and built a seamless interface so the chatbot felt like a natural part of the platform. As a result, the client received a fully functional virtual assistant that became an integral feature of the product, increased subscription value, and defined a clear direction for the future development of the service.

AI Assistant for Insurance Brokers

CoverageXpert is an online platform that aggregates information about commercial insurance products to help users better understand insurance terms and conditions. The client approached us to create an app that would simplify this process.

Our specialists integrated a ChatGPT-powered business assistant into the system. The assistant generates instant and contextually relevant explanations of insurance terms and product details, drawing on the client’s proprietary database. This feature enables brokers and clients to quickly clarify complex insurance language and find the right information without manually searching through lengthy documents.

Later, we expanded the functionality with a Gemini-based tool that analyzes coverage packages and identifies gaps that might otherwise go unnoticed. This innovation significantly reduces the risk of missed details in critical insurance agreements.

Thanks to the integrated AI assistants, the platform has become a valuable tool for brokers and their clients. Today, CoverageXpert operates as a B2B solution with several enterprise clients already on board.

AI Assistant for Fitness App

NNOXX is a US-based wellness and fitness company known for its wearable device that measures muscle oxygenation and nitric oxide levels in the blood during workouts. While working with sports clubs, the client noticed that athletes often pushed beyond their physical limits during intensive training, which led to long recovery periods. The client’s mission was to reduce the percentage of injuries.

However, the client needed more than just a device — they needed a digital solution capable of analyzing and translating raw data into actionable insights. That’s when Rubyroid Labs, a Ruby on Rails development company, joined the project.

Our team developed a smart web application that collects and processes information from the biosensor. To further enhance the product, we integrated an AI assistant that delivers personalized training recommendations based on real-time biosensor data and helps keep workout plans safe, efficient, and optimized.

With the launch of the NNOXX app, the client created the first platform to combine advanced biosensor technology with AI-powered analytics. The app has gained recognition across major American sports leagues — NBA, MLB, NHL, and NFL.

AI Models That Pair Well with RoR

Now let’s consider the models we recommend for integrating an AI assistant into your RoR application: OpenAI’s GPT-5, Google’s Gemini 2.5 Pro, Anthropic’s Claude 4 (Opus and Sonnet), Meta’s open-source LLaMA 4, and DeepSeek’s R-series. Our recommendations draw on benchmark performance and our own project experience.

OpenAI GPT-5

GPT-5 ranks among the very top in benchmark aggregators like LMArena, where it stands out for reasoning, code generation, and domain-specific analysis. The model combines speed with a “deep thinking” mode, which makes it equally effective for everyday conversations and multi-step problem solving.

GPT-5 can handle quick, repetitive queries or support longer dialogues that require contextual understanding. Its capacity for very large inputs (up to 400K tokens) makes it especially suitable for assistants that process documents or large datasets.

Pricing is $1.25 per million input tokens and $10 per million output tokens, which is more expensive than open-source options like LLaMA 4 or DeepSeek. In practice, though, its reliability and accuracy often reduce the need for corrections, which pays off in long-term use. OpenAI also offers “mini” and “nano” variants for cost-sensitive projects, as well as enterprise Pro access.
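Token prices are easiest to compare once they’re turned into a per-request and per-month figure. The sketch below does that arithmetic with the GPT-5 list prices quoted above; the workload (1,500 input and 500 output tokens per request, 1,000 requests per day) is a hypothetical example, and since prices change, the constants should be treated as inputs to keep current.

```ruby
# GPT-5 list prices quoted above, in USD per million tokens.
GPT5_PRICES = { input: 1.25, output: 10.0 }.freeze

# Cost of a single request in USD.
def request_cost(input_tokens:, output_tokens:, prices: GPT5_PRICES)
  (input_tokens * prices[:input] + output_tokens * prices[:output]) / 1_000_000.0
end

# Cost of a steady daily volume over a billing period in USD.
def monthly_cost(requests_per_day:, input_tokens:, output_tokens:, days: 30, prices: GPT5_PRICES)
  request_cost(input_tokens: input_tokens, output_tokens: output_tokens, prices: prices) *
    requests_per_day * days
end

# Hypothetical workload: 1,500 input and 500 output tokens per request.
per_request = request_cost(input_tokens: 1_500, output_tokens: 500)
per_month = monthly_cost(requests_per_day: 1_000, input_tokens: 1_500, output_tokens: 500)
puts format("$%.6f per request, $%.2f per month", per_request, per_month)
# prints: $0.006875 per request, $206.25 per month
```

Output tokens dominate here: at eight times the input price, 500 output tokens cost more than 1,500 input tokens, so capping response length is often the quickest saving. Passing another model’s prices through the `prices:` argument gives a like-for-like comparison.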

Anthropic Claude 4

Claude 4, especially the Opus variant, is widely praised for clarity of responses and its ability to handle long-form reasoning with a natural, almost conversational tone. In benchmarks it ranks just behind GPT-5, but it often wins favor in user ratings for being easier to interact with and less prone to misinterpretations.

For assistants, Claude 4’s strength is trustworthiness in dialogue. It can manage long threads of context (up to 200K tokens), which makes it a solid choice for customer support systems or knowledge-heavy internal tools. Sonnet, the lighter version, offers reduced latency and cost, which makes it more useful for assistants that need fast turnaround on high volumes of queries.

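Pricing depends heavily on the variant. Opus is the premium tier, priced well above GPT-5 (around $15 per million input tokens and $75 per million output tokens), while Sonnet is significantly cheaper (around $3 and $15, respectively).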

Google Gemini 2.5 Pro

Gemini 2.5 Pro is Google’s most capable closed-source model to date. Benchmark tests consistently show its strength in reasoning and multimodal tasks. It handles tasks that involve structured data, live search, or information pulled from Google’s services.

The model is particularly effective at retrieval-augmented generation, where it can combine its own reasoning with up-to-date information. This makes it a strong candidate for assistants in industries that depend on accuracy and timeliness, such as finance or healthcare.
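Retrieval-augmented generation works the same way regardless of the model: find the passages relevant to the question, then place them in the prompt so the model answers from current data. The sketch below is a minimal illustration with hypothetical sample documents; real systems usually rank passages with vector embeddings, and simple word overlap stands in here so the example runs with the standard library only.

```ruby
# Lowercased alphanumeric words.
def tokenize(text)
  text.downcase.scan(/[a-z0-9]+/)
end

# Ranks documents by how many distinct query words they contain
# (a stand-in for embedding similarity) and returns the top matches.
def retrieve(query, documents, top_k: 2)
  query_terms = tokenize(query).uniq
  documents
    .map { |doc| [doc, (tokenize(doc) & query_terms).size] }
    .select { |_, score| score.positive? }
    .sort_by { |_, score| -score }
    .first(top_k)
    .map(&:first)
end

# Puts the retrieved passages into the prompt ahead of the question.
def build_prompt(query, passages)
  context = passages.each_with_index.map { |p, i| "[#{i + 1}] #{p}" }.join("\n")
  "Answer using only the context below.\n\nContext:\n#{context}\n\nQuestion: #{query}"
end

docs = [
  "Savings account interest rates are updated on the first business day of each month.",
  "Branch opening hours are 9am to 5pm on weekdays.",
  "Wire transfers above a set limit require additional verification."
]
question = "When is the savings interest rate updated?"
passages = retrieve(question, docs, top_k: 1)
puts build_prompt(question, passages)
```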

Pricing is tiered by prompt length: around $1.25 per million input tokens and $10 per million output tokens for prompts up to 200K tokens, with higher rates for longer prompts. That puts Gemini roughly on par with GPT-5 on cost, while it maintains comparable or even superior benchmark scores in several categories.

DeepSeek R-series

The DeepSeek R-series is the newest entrant to gain attention, largely because of its cost-to-performance ratio. It performs reliably across general tasks and is designed to be cheaper to run at scale.

DeepSeek’s combination of low cost and solid quality makes it well suited to customer-facing bots that process thousands of requests per day. Its open availability and low per-token cost (often a fraction of GPT-5 or Claude 4) make it appealing for startups and businesses that want to experiment with assistants without committing to premium pricing.

We recently published a dedicated comparison of ChatGPT and DeepSeek, exploring the features and best practices of using each one.

Meta LLaMA 4

As an open-source model, LLaMA 4 doesn’t aim to dominate every benchmark, but it consistently delivers solid results in reasoning and language understanding. Since it can be self-hosted, businesses can fine-tune it for specific domains or integrate it into private environments.

LLaMA 4 is often chosen by enterprises with in-house ML expertise that want to fine-tune assistants on proprietary data and build domain-specific tools. Much of its appeal comes from its cost structure: operating costs are driven mainly by infrastructure rather than per-token usage fees.

Which AI Model to Choose for Your Rails Application

With so many options available, the real challenge is to choose the right one for your business case. To help, we’ve distilled our analysis into a side-by-side comparison.

| Model | Strengths | Best For | Cost Consideration | Industries Where It Fits Well |
|---|---|---|---|---|
| OpenAI GPT-5 | Top-tier reasoning, long-context handling (400K tokens), strong coding and analysis | Complex assistants that process documents, perform multi-step reasoning | Premium pricing; offset by reduced need for corrections | Legal, research, enterprise SaaS |
| Anthropic Claude 4 (Opus / Sonnet) | Natural, clear dialogue; reliable for long-form context (200K tokens) | Customer support, knowledge-heavy assistants, conversational bots | Opus priced well above GPT-5; Sonnet faster and more cost-efficient | Customer service, HR tools, education |
| Google Gemini 2.5 Pro | Multimodal tasks, structured data handling, real-time search integration | Assistants that depend on live or external data sources | Priced roughly on par with GPT-5, with strong benchmark results | Finance, healthcare, logistics |
| Meta LLaMA 4 | Open-source, customizable, self-hostable; strong reasoning foundation | Enterprises with ML expertise that need fine-tuning or privacy | Infrastructure-driven costs, no per-token fees | Government, defense, specialized enterprise |
| DeepSeek R-series | Reliable baseline performance with very low per-token cost | Experimentation without high overhead | Fraction of GPT-5/Claude costs; great for large query volumes | Startups, e-commerce, customer-facing bots |

Each system has strengths that make it more suitable for certain contexts. The right choice depends less on abstract scores and more on your business case. So how do you decide? A useful approach is to step back and ask yourself a few questions:

- What problem are we solving? Define the process before choosing the assistant.

- What is our budget tolerance? Estimate your typical request volume and compare total costs.

- How much scalability do we anticipate? Will the assistant stay in one role or expand to HR, finance, or supply chain automation later?

- What about compliance and data security? If you operate under strict regulations, make sure the assistant complies with privacy standards.

- How will we maintain it? AI assistants need ongoing monitoring, evaluation, and prompt or model updates to stay accurate and relevant.

The answers will guide you toward the model that aligns with your technical requirements and business priorities. If you’re unsure where to start or feel overwhelmed by balancing cost, accuracy, and scalability, our team can help. We’ll evaluate your product, domain, and workflows to recommend the model that delivers the most value in your context.

Avoid These Mistakes When Building AI Assistants

Even the most advanced model can underperform if the implementation is careless. Below are a few common pitfalls to watch out for and how to prevent them:

- Secure your API keys properly. Never hardcode keys or keep them in shared config files. Store them in environment variables or Rails’ encrypted credentials, and make sure files containing secrets are excluded from version control. This protects your assistant from accidental exposure.

- Don’t block the main thread. Long-running API calls or computations should be handled asynchronously. Use background job processors (e.g., Sidekiq, Resque) or non-blocking HTTP libraries so your application remains responsive even under heavy load.

- Keep the logic simple and modular. Avoid the temptation to build a “mega-agent” from day one. Start small, focus on core tasks, and structure the code into reusable, single-purpose modules. Regular refactoring helps keep technical debt under control.

- Stay mindful of token limits. Oversized prompts can lead to errors and inflated costs. Track token usage, streamline your inputs, and prune older conversation history when it’s no longer relevant. Optimized prompts mean lower costs and more reliable responses.
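Pruning conversation history can be as simple as keeping the system prompt and then the most recent turns that fit a token budget. The sketch below assumes a rough heuristic of about 4 characters per token for English text; the helper names are hypothetical, and the provider’s own tokenizer should be used when exact counts matter.

```ruby
# Rough heuristic (assumption): about 4 characters per token for English text.
def estimate_tokens(text)
  (text.length / 4.0).ceil
end

# Keeps system messages, then as many of the most recent turns as fit the budget.
def prune_history(messages, max_tokens:)
  system_msgs, turns = messages.partition { |m| m[:role] == "system" }
  budget = max_tokens - system_msgs.sum { |m| estimate_tokens(m[:content]) }
  kept = []
  turns.reverse_each do |m|
    cost = estimate_tokens(m[:content])
    break if cost > budget # stop at the first turn that no longer fits

    kept.unshift(m)
    budget -= cost
  end
  system_msgs + kept
end

history = [
  { role: "system", content: "You are a support agent." }, # ~6 tokens
  { role: "user", content: "a" * 40 },                      # ~10 tokens
  { role: "assistant", content: "b" * 40 },                 # ~10 tokens
  { role: "user", content: "c" * 40 }                       # ~10 tokens
]
pruned = prune_history(history, max_tokens: 26)
pruned.map { |m| m[:content][0] } # => ["Y", "b", "c"] (oldest turn dropped)
```

Stopping at the first turn that doesn’t fit keeps the remaining history contiguous; a production version might summarize the dropped turns instead of discarding them.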

Careful implementation keeps the assistant reliable and cost-effective. By following these practices, you get the most out of the model you choose.

Conclusion

Throughout the article, we’ve shown how Ruby on Rails, supported by a mature ecosystem of gems, enables integration of AI capabilities without unnecessary complexity. We’ve also explored use cases where assistants proved their value. The core insight: AI assistants represent a shift toward hyper-personalization and more natural human–machine interactions.

For RoR projects in particular, this shift is both technically achievable and commercially rewarding. We believe that within the next few years, Ruby on Rails applications that integrate assistants will gain a decisive competitive edge in customer engagement and cost efficiency.